As Has Been Shown

(Citation needed)

This is a post in a potential series I am titling: Please someone build this because otherwise I will vibe code it and it will be broken and sad and thus I will be broken and sad.

Academic papers cite other papers. Blog posts, mostly cite their sources, at least the better ones. News articles… do some nonsense version of this with what I assume is just SEO BS where any hyperlinks just point to other articles on its own site that only tangentially relate via keyword.

(My least favourite version of this is when their judicial beat reporter reports on say, a Supreme Court decision, and neglects to link the actual f-ing decision, but will be happy to link to “see our other coverage here” or “as previously reported” pages. Yet another reason to never take a corporatised news outlet all that seriously.)

News outlets aside, at the very least in academia, it is a common understanding that, in general, proper citation is a good thing.

Some papers do it better than others, some not at all. Some WAY too much. I love a paper that is half footnotes. I don’t love a paper that is half footnotes because the citations are preformative and needy, shouting “I promise I know what I am talking about!” And, being in higher education for a decade or so, I can tell you for free I don’t think many students get adequate (any) training in the function a citation really serves and how important it actually is. Being a reader of academic work for longer, I can tell you it is not limited to students. I am an honest hypocrite and promulgator of this problem, because something about claiming things in public entails a certain kind of vulnerability that only 16 references to assure the reader you are not a complete ass, seems to satisfy and I take waaaay too long to put things out as it is.

The purpose of a citation, as I have often told students, is to indicate that some claim in the citing paper draws support from some content in the cited paper.

This can mean any number of things. For students, it often just means you are showing the instructor you read, understood and can use the course material in a productive way at best (least likely), or that, at worst, you know of the work’s existence and think this is what a paper is supposed to look like (most likely). In practice, for academics, the relationship between claim and citation is often not all that much better and is vague, tangential, absent, padding, or to be honest, bullshit.

Several factors contribute to this. Academic publishing rewards volume. These incentives favour producing papers over producing careful arguments. And papers need to look a certain way. And those academics were once those same students. Bad citation practice goes hand in hand with this structure. The functional wish of “I hope my professor likes this, I think this is what it is supposed to look like” is pretty analogous to, “I hope my peer reviewer likes this, this is what most the journal’s papers look like”.

Authors often cite papers because they are topically adjacent, because reviewers expect citations in certain areas, because the citation count signals familiarity with a literature. I once was in an audience where a senior academic instructed an audience of junior scholars on the importance of padding with the right references to get published more, even when it had very little to do with the argument or claim being made. A sort of academic name check. I was chastised for my push back on this and called naive, un-pragmatic. I’m happy with that as long as they are happy being full of shit. They publish more, and I have a blog. Point taken.

There seems to be a sense that practicality trumps any consideration to whether the cited paper actually supports the specific claim. Actually does what we rely on good science to do. This creates an information ecosystem where the evidentiary structure underlying claims becomes difficult if not impossible to trace, and this is a BIG problem. This is all the worse when LLMs are being trained to aid in research and drafting, with this sort of practice to emulate. No wonder we get reproducibility crisis after crisis.

There is also a subtler issue. The same claim can appear across many texts, framed differently, serving different argumentative purposes. A finding about correlation might appear in one paper as a cautious observation, in another as evidence for a causal mechanism, in a policy document as justification for intervention, in a blog post as settled fact. Tracking how claims travel and transform as they move through discourse would reveal something about how ideas gain or lose nuance, how hedges disappear, how tentative findings become received wisdom.

The prospect of thoroughly checking citations along its conceptual genealogical line is ridiculously time-costly and not practical even if we payed all the best peer reviewers in the world to work on it full time.

What I want to you to build

Imagine an agent or suite of agents that works at the level of individual claims, examining the evidentiary relationships underlying assertions in any given text. The goal is not fact-checking in the sense of adjudicating truth. We have some automated fact checkers and they aren’t phenomenal. And ground truth is way too thorny. So perhaps a more tractable target is to make visible the structure of support (or lack of support) that connects claims to evidence.

Whether this approach produces useful outputs depends on how well the agent can parse claims, locate relevant source material, and characterize relationships between claims and sources. These are difficult problems that I don’t know how to solve just yet, but the description below outlines what I think the agent would attempt to do.

Core functionality

The main operation is identifying and classifying a claim in text. The agent would attempt to identify individual claims in a paper and classify them by whether or not they require external support, and whether any has been provided.

Some assertions will be definitional or follow logically from prior assertions in the same text. So it would need to distinguish those from others that require external support. These would also need to be categorised by what kind of support they are asserting or require. This could include distinctions like empirical findings, authoritative statements, prior arguments, etc. This classification is not straightforward, as reasonable people can reasonably disagree about what counts as common knowledge, what requires citation, what follows from argument versus what needs independent grounding, but I think having a tool to automate this process will make that disagreement a bit of a moot point if used at scale.

For claims the agent identifies as requiring support, two paths follow depending on whether a citation exists.

When no citation exists, the agent searches for candidate sources.

The search attempts to find material that both supports and challenges the claim. A claim might be well-supported in some literature and contested in another. Presenting only confirming sources would misrepresent the evidentiary landscape. The agent would attempt to return sources representing the range of positions on the claim, though how well it succeeds at this depends on the search and retrieval mechanisms, which have known limitations in distinguishing topical relevance from argumentative stance.

When a citation exists, the agent attempts to evaluate the relationship between claim and cited source. This evaluation has a number of dimensions to consider. Among others I might be overlooking,

- Precision. Does the cited source contain material that actually bears on the claim, or is the citation topically adjacent but not directly relevant? Many citations fall into the latter category. A paper about decision-making might cite a foundational paper on heuristics because the topic is related, not because the foundational paper supports the specific claim being made.

- Specificity. Can the agent locate a particular passage in the cited source that the claim appears to draw from if it is not done so explicitly, or does the citation gesture at the whole work without pointing to specific content? General citations make it difficult for readers to verify the claimed support and are often a very clear sign of didn’t-want-you-to-forget-I-read-this-ism.

- Citation type. Is the cited material empirical evidence, theoretical argument, definitional authority, methodological precedent, or something else? Different types of support have different implications for the claim’s strength.

- Direction and strength. Does the cited material support the claim, partially support it, remain neutral, or actually undercut it? Some citations, when examined, turn out to contradict what they are cited to support. Peer review when done well, is supposed to weed this out, but well, if you have ever done peer review you know it is a bit of a mixed bag. See above publishing incentive structures.

The agents goal would be to characterise each citation along these dimensions. Though the characterisations represent the agent’s interpretation, which will likely be wrong in the beginning but it at least makes the interpretation explicit so users can evaluate it.

So let’s call that step one. I think that is a reasonable project on its own that could well do some good in the world. But wait! There’s more! Beyond evaluating individual claim-citation relationships, additional functions could provide context about how claims circulate and transform.

I can imagine a function that would allow for a precedent search to find where similar claims have appeared previously and how they were supported in those appearances. A claim that appears novel in one paper might have a long history. “We the authors are the first to note that” type statements are a perfunctory cliche that are either a. not true in the least, or b. so reduced by qualifiers it is a bit like those baseball stats that say something like: “this is the first left handed batter to hit a double on a Tuesday afternoon while chewing gum in his left cheek using only one molar since 1906 when Sassafrass Kensington did it against the Washington Argonauts! What a feat! And lets be fair, Ole Sassafras was probably chewing something other than gum, so really this is the first time in history! Do you feel it? HISTORY! Gosh, Joe. Aren’t we all so lucky to be witnessing this?”

Tracing the intellectual genealogy, where the idea originated, how it developed, what support it accumulated or failed to accumulate over time would give us a better way to understand any given claim and reevaluate it in new context. To iterate. Which I think is the whole point of the citation enterprise.

Another function I could imagine would be some sort of framing analysis that attempts to characterise how a claim is rhetorically deployed. The same factual assertion can serve different argumentative purposes depending on context, accompanying language, and what conclusions it is used to support. Claims can be framed cautiously or confidently, can be presented as central or peripheral, can carry different emotional valences. Tracking how framing varies across appearances of similar claims might reveal patterns in how ideas get amplified, softened, or transformed as they move through discourse. In what service they are used, or what metaphorical frame they employ. It can serve as a way to understand to force of a certain argument as a supplementary factor of evaluating it.

What it might look like (Small Picture)

┌─────────────────────────────────────────────────────────────────────────────┐

│ CLAIM │

│ "AI systems shift users' moral judgments even when users are aware │

│ they are interacting with AI" (Paper A) │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ SOURCE │

│ │

│ Paper A (2023). "Chatbots are moral persuasion nightmares." │

│ Journal of AI and Stuff │

│ │

│ Passage: "Participants who received moral advice from the chatbot showed │

│ significant alignment with that advice in subsequent judgments (p < .001), │

│ even though all participants were informed they were interacting with an │

│ AI system." [p. 7, para. 2] │

│ │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ FIT ASSESSMENT │

│ │

│ Precision: DIRECT — passage explicitly supports the claim │

│ Specificity: HIGH — locatable to specific paragraph │

│ Type: EMPIRICAL — experimental finding with statistical test │

│ Direction: SUPPORTS — but note: study tested one chatbot, not "AI │

│ systems" broadly; claim may slightly overgeneralize │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ PRECEDENT │

│ │

│ Similar claims appear in: │

│ │

│ • Paper B (2024) — supported with larger sample, different chatbot │

│ • Paper C (2023) — supported, but effect disappeared after one week │

│ • Paper D (2022) — NOT supported; found disclosure eliminated influence │

│ │

│ Note: Paper D's finding complicates the "even when aware" component. │

│ The claim treats this as settled when the evidence is mixed. │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ FRAMING │

│ │

│ As used here: Stated as established fact ("have been shown") │

│ In Paper A: Stated cautiously ("our results suggest") │

│ In Paper B: Stated with alarm ("demonstrates the danger") │

│ In Paper D: Stated as contingent ("under certain conditions") │

│ │

│ Transformation: Hedge removed; contested finding presented as consensus │

└─────────────────────────────────────────────────────────────────────────────┘

As Contrast: A weak citation for the same claim

┌─────────────────────────────────────────────────────────────────────────────┐

│ CLAIM │

│ "AI systems shift users' moral judgments even when users are aware │

│ they are interacting with AI (Paper E)." │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ SOURCE │

│ │

│ Paper E (2023). "The Ethics of Artificial Intelligence." │

│ Oxford Handbook of Digital Ethics and Vigourous Handwaving │

│ │

│ Passage: [NO SPECIFIC PASSAGE FOUND] │

│ Paper E discusses AI ethics generally; mentions that AI may influence │

│ human decision-making but provides no empirical support for the │

│ specific claim about moral judgment shifts under disclosure. │

└─────────────────────────────────────────────────────────────────────────────┘

│

▼

┌─────────────────────────────────────────────────────────────────────────────┐

│ FIT ASSESSMENT │

│ │

│ Precision: TANGENTIAL — topically related, not directly supporting │

│ Specificity: LOW — no locatable passage; general wave at the work │

│ Type: THEORETICAL — no empirical backing for this specific claim │

│ Direction: NEUTRAL — doesn't support or contradict; wrong kind of source │

│ │

│ ⚠ FLAG: Citation appears decorative. Claim needs empirical support. │

└─────────────────────────────────────────────────────────────────────────────┘

What it might look like (Big Picture)

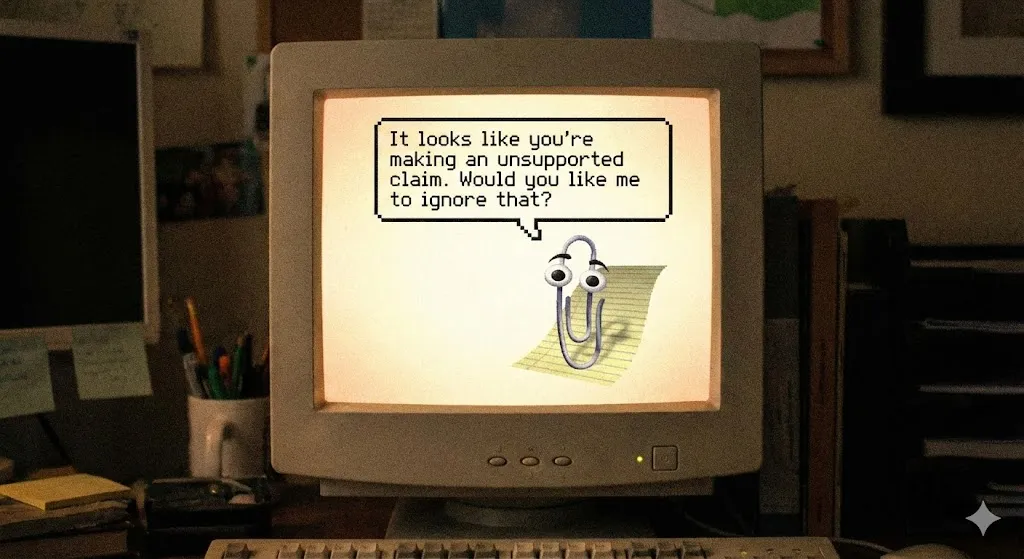

If all (or some) of these functions could be developed, there could be some really interesting ways of deploying and displaying new work as embedded iterations of prior work that doesn’t need a literature review that is unwieldy. It could simply state the premises and rely on the agent to promulgate the links to prior research. The literature review would then serve as a self study mechanism/drafting check on the writer to say “are you sure you mean this?” Good. I have invented clippy.

Visually, it could draw inspiration from Ted Nelson’s Xanadu concept, where documents maintain visible, persistent connections to their sources.

Rather than citations that point to entire works, the goal is links that connect specific claims to specific passages. When you download a paper, you view it with either downloaded appendices with all the previous papers, excerpts, or some live version hosted online. Not great for people like myself who still like to read on paper, or at least with an ereader, but there is nothing that would stop one from still reading an “article only” version, only to check claim values as a second step.

It may look less like a traditionally sectioned article and more like a supplementary document rendered as a collection of claims, each annotated with its evidentiary status. Or maybe a hybrid of both. Claims that require support but lack it would be flagged. Claims with citations would include the agent’s assessment of the citation relationship and a link to the specific passage the claim appears to draw from. For claims where the agent found candidate sources, those sources would be linked with characterisations of how they bear on the claim, counter examples, context.

This structure would make the evidentiary architecture of a document inspectable. Readers could see which claims rest on which evidence, how strong those connections are, and where claims lack grounding. Of course, whether this inspection proves useful depends on whether users find the agent’s characterisations accurate enough to be worth engaging with.

Tractable (I think) initial problems to start with

Claim identification, what counts as a claim, where claims begin and end, and which claims require support vs common knowledge, etc have some pretty fuzzy borders. I think it would need to start here.

Source retrieval is similar in that search systems typically return topically relevant results, but topical relevance does not guarantee argumentative relevance. A paper that discusses the same subject may not bear on the specific claim in question. The agent attempts to evaluate relevance, but the initial retrieval shapes what it has to work with. I think this only works with a large enough database.

In the same vein, the Xanadu-style linking assumes that source material is accessible. Academic papers behind paywalls, deleted web pages, and sources that exist only in print may not be retrievable. I think we can (must at some point) go full Aaron Schwartz here given it seems to have been fine now that all the LLM bros did it, right?

The value of this project, if it has value, lies in making visible something that is currently obscure. Arguments rest on evidence. Claims derive from sources. We pretend they don’t and it drives me nuts. So there is your value, I will be that little less nuts. Well, probably not, but some kind of push back to the over generalising, nonsense spouting talking heads that I thought were reserved for sports media but ARE CLEARLY EVERYWHERE is a nice first step to saving my sanity. So, won’t you please build it.

P.S I have not cited a single thing in this post. This was completely on purpose. One, to cover my own ass for my non-supported claims. Two, because wouldn’t it have been so much better if I had provided references for every one of my claims?